
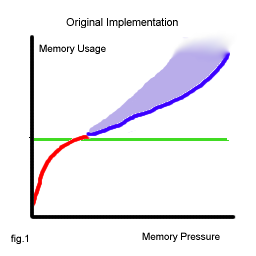

Why a Change Was Needed

The ZODB uses a memory cache to hold objects in memory. Objects are loaded into memory from persistent storage on demand. A garbage collector gradually removes them from memory, and in Zope it runs after each transaction or HTTP request. The original ZODB memory cache responds poorly to memory pressure. By memory pressure, I mean the average number of objects touched between calls to the garbage collector. In Zope terms, this is the average number of objects used to calculate each page.

The graph below (Figure 1) shows how memory usage is determined by memory pressure. The green line marks the target size: the size that the cache is trying to maintain. The red and blue line marks the actual size that the cache will reach for a given level of memory pressure. (Note: memory usage is mainly determined by cache size, and I use the two terms interchangeably here.) The original memory cache has the following undesirable characteristics.

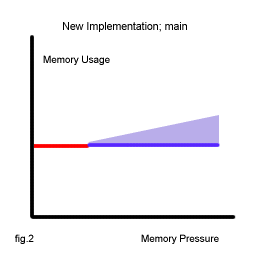

The main goal of this implementation is to flatten out this response to memory pressure, giving the characteristic shown in Figure 2 below.

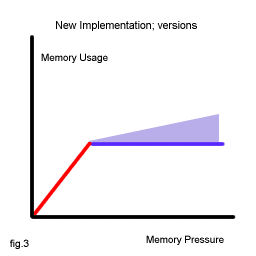

Note that the cache size always settles at exactly the target size. Under memory pressure it grows slightly during the transaction, since new objects have to be loaded into memory. However, once the transaction is complete the garbage collector always removes just enough objects to bring the cache size back down to the target size.

There is one additional difference between the old and new implementations that I should mention here: the interpretation of the target size. Under the old implementation this figure was the target number of objects in the cache. That count includes both ghost and non-ghost objects, but only non-ghosts contribute significantly to memory usage, because a ghost is a placeholder whose state has not been loaded. In the new implementation this figure is the target number of non-ghost objects. If you have carefully tuned this parameter, you may want to try a number 5 or 10 times smaller. If you are still using the default, you probably do not need to change anything.

For caches used to store objects for a version, the target profile is slightly different:

If a version is not touched for a while, it is better for its cache size to drop gradually to zero, freeing memory for other uses (perhaps other versions).
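The policy described above can be modelled with a short Python sketch. This is a hypothetical model, not the ZODB's actual cache code. The class `SketchCache`, its methods, the least-recently-used eviction order and the halving `decay` factor for idle version caches are all assumptions made for illustration. In the model, only non-ghost objects count toward the target, and `gc()` stands for the collector run between transactions.

```python
from collections import OrderedDict
from itertools import count


class SketchCache:
    """Hypothetical model of the new cache policy (not the ZODB's real code).

    Only non-ghost objects (those whose state is loaded) count toward the
    target size; ghost entries are tracked but never counted.
    """

    def __init__(self, target_size, is_version=False, decay=0.5):
        self.target_size = target_size
        self.is_version = is_version
        self.decay = decay                # assumed shrink factor for idle version caches
        self.effective_target = target_size
        self.non_ghosts = OrderedDict()   # oid -> state, least recently used first
        self.ghosts = set()               # oids whose state has been dropped
        self.touched_since_gc = False

    def access(self, oid, load_state):
        """Touch an object, loading its state on demand if it is not in memory."""
        self.touched_since_gc = True
        if oid in self.non_ghosts:
            self.non_ghosts.move_to_end(oid)  # now the most recently used
            return self.non_ghosts[oid]
        self.ghosts.discard(oid)              # a ghost becomes a non-ghost again
        state = load_state(oid)
        self.non_ghosts[oid] = state
        return state

    def gc(self):
        """Run between transactions: ghostify LRU objects down to the target."""
        if self.is_version and not self.touched_since_gc:
            # Idle version cache: shrink the target so its size drifts toward zero.
            self.effective_target = int(self.effective_target * self.decay)
        else:
            self.effective_target = self.target_size
        while len(self.non_ghosts) > self.effective_target:
            oid, _state = self.non_ghosts.popitem(last=False)  # least recently used
            self.ghosts.add(oid)
        self.touched_since_gc = False


def load(oid):
    return {"oid": oid}  # stand-in for reading state from persistent storage


# Main cache: after gc() the size is the target, whatever the memory pressure.
main = SketchCache(target_size=100)
oids = count()
sizes = []
for pressure in (100, 1000, 10, 5000):  # objects touched per transaction
    for _ in range(pressure):
        main.access(next(oids), load)
    main.gc()
    sizes.append(len(main.non_ghosts))
print(sizes)  # [100, 100, 100, 100]

# Version cache: once it stops being touched, each gc() pass halves it.
version = SketchCache(target_size=100, is_version=True)
for oid in range(100):
    version.access(oid, load)
version.gc()  # touched since the last pass, so it stays at the target
idle = []
for _ in range(4):
    version.gc()
    idle.append(len(version.non_ghosts))
print(idle)  # [50, 25, 12, 6]
```

Ghost entries accumulate in `ghosts` without counting toward the target, which mirrors the new interpretation of the target size. A real cache would also need some way to discard ghost entries, and this sketch omits that. The LRU order and the halving decay are only one plausible choice. The article itself specifies just that the collector removes enough objects to reach the target, and that an idle version cache should shrink gradually to zero.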